Configuring and managing virtualization

Setting up your host, creating and administering virtual machines, and understanding virtualization features in Red Hat Enterprise Linux 8

Abstract

Providing feedback on Red Hat documentation

We appreciate your input on our documentation. Please let us know how we could make it better. To do so:

- For simple comments on specific passages, make sure you are viewing the documentation in the Multi-page HTML format. Highlight the part of text that you want to comment on. Then, click the Add Feedback pop-up that appears below the highlighted text, and follow the displayed instructions.

For submitting more complex feedback, create a Bugzilla ticket:

- Go to the Bugzilla website.

- As the Component, use Documentation.

- Fill in the Description field with your suggestion for improvement. Include a link to the relevant part(s) of documentation.

- Click Submit Bug.

Chapter 1. Virtualization in RHEL 8 - an overview

If you are unfamiliar with the concept of virtualization or its implementation in Linux, the following sections provide a general overview of virtualization in RHEL 8: its basics, advantages, components, and other possible virtualization solutions provided by Red Hat.

1.1. What is virtualization in RHEL 8?

Red Hat Enterprise Linux 8 (RHEL 8) provides virtualization functionality. This means that with the help of virtualization software, a machine running RHEL 8 can host multiple virtual machines (VMs), also referred to as guests. Guests use the host’s physical hardware and computing resources to run a separate, virtualized operating system (guest OS) as a user-space process on the host’s operating system.

In other words, virtualization makes it possible to have operating systems within operating systems.

VMs enable you to safely test software configurations and features, run legacy software, or optimize the workload efficiency of your hardware. For more information on the benefits, see Section 1.2, “Advantages of virtualization”.

For more information on what virtualization is, see the Red Hat Customer Portal.

To try out virtualization in RHEL 8, see Chapter 2, Getting started with virtualization in RHEL 8.

In addition to RHEL 8 virtualization, Red Hat offers a number of specialized virtualization solutions, each with a different user focus and features. For more information, see Section 1.5, “Red Hat virtualization solutions”.

1.2. Advantages of virtualization

Using virtual machines (VMs) has the following benefits in comparison to using physical machines:

Flexible and fine-grained allocation of resources

A VM runs on a host machine, which is usually physical, and physical hardware can also be assigned for the guest OS to use. However, the allocation of physical resources to the VM is done in software, and is therefore very flexible. A VM uses a configurable fraction of the host memory, CPUs, or storage space, and that configuration can specify very fine-grained resource requests.

For example, what the guest OS sees as its disk can be represented as a file on the host file system, and the size of that disk is much less constrained than the available sizes for physical disks.

Software-controlled configurations

All of a VM’s configuration is saved as data on the host, and under software control. Therefore, a VM can easily be created, removed, cloned, migrated, operated remotely, or connected to remote storage.

In addition, the current state of the VM can be backed up as a snapshot at any time. A snapshot can then be loaded to restore the system to the saved state.

Separation from the host

A guest runs on a virtualized kernel, separate from the host OS. This means that any OS can be installed on a VM, and that even if the guest OS becomes unstable or is compromised, the host is not affected in any way.

Note: Not all operating systems are supported as a guest OS in a RHEL 8 host. For details, see Section 11.2, “Recommended features in RHEL 8 virtualization”.

Space and cost efficiency

A single physical machine can host a large number of VMs. Therefore, it avoids the need for multiple physical machines to do the same tasks, and thus lowers the space, power, and maintenance requirements associated with physical hardware.

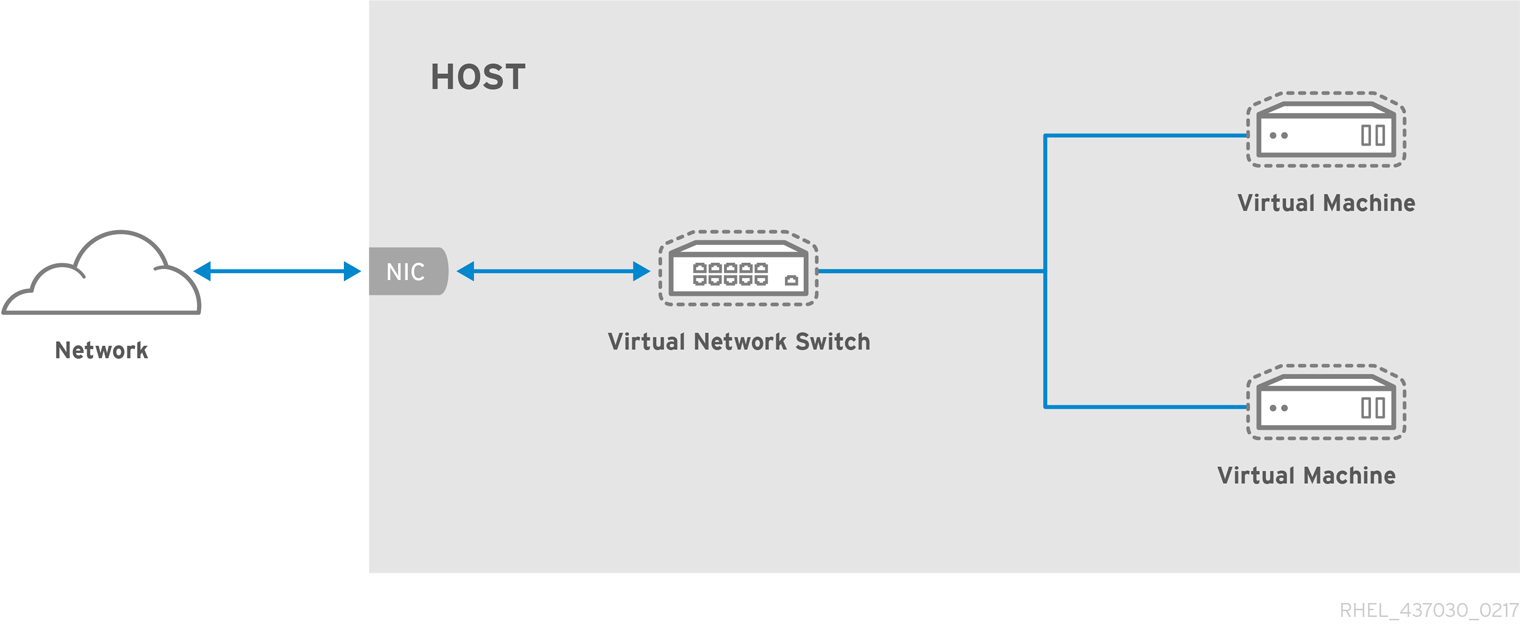

1.3. RHEL 8 virtual machine components and their interaction

Virtualization in RHEL 8 consists of the following principal software components:

Hypervisor

The basis of creating virtual machines (VMs) in RHEL 8 is the hypervisor, a software layer that controls hardware and enables running multiple operating systems on a host machine.

The hypervisor includes the Kernel-based Virtual Machine (KVM) kernel module and virtualization kernel drivers, such as virtio and vfio. These components ensure that the Linux kernel on the host machine provides resources for virtualization to user-space software.

At the user-space level, the QEMU emulator simulates a complete virtualized hardware platform that the guest operating system can run in, and manages how resources are allocated on the host and presented to the guest.

In addition, the libvirt software suite serves as a management and communication layer, making QEMU easier to interact with, enforcing security rules, and providing a number of additional tools for configuring and running guests.

XML configuration

A host-based XML configuration file (also known as a domain XML file) describes a specific VM. It includes:

- Metadata such as the name of the VM, time zone, and other information about the VM.

- A description of the devices in the VM, including virtual CPUs (vCPUs), storage devices, input/output devices, network interface cards, and other hardware, real and virtual.

- VM settings such as the maximum amount of memory it can use, restart settings, and other settings about the behavior of the VM.
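As a rough illustration of the kinds of content listed above, the following sketch writes a heavily simplified, hypothetical domain XML file to a temporary location and reads the VM name back out of it. The VM name, disk path, and values are assumptions made for this example, and XML files generated by libvirt contain many more elements than shown here.

```shell
# Write a simplified, hypothetical domain XML to a temporary file.
# Every value below is an example, not taken from a real VM.
xml_file=$(mktemp)
cat > "$xml_file" <<'EOF'
<domain type='kvm'>
  <!-- Metadata -->
  <name>demo-guest1</name>
  <!-- VM settings: memory limit, vCPU count, restart behavior -->
  <memory unit='MiB'>2048</memory>
  <vcpu>2</vcpu>
  <os>
    <type arch='x86_64'>hvm</type>
  </os>
  <on_reboot>restart</on_reboot>
  <!-- Devices: one disk backed by a host file, one network interface -->
  <devices>
    <disk type='file' device='disk'>
      <source file='/var/lib/libvirt/images/demo-guest1.qcow2'/>
      <target dev='vda' bus='virtio'/>
    </disk>
    <interface type='network'>
      <source network='default'/>
    </interface>
  </devices>
</domain>
EOF

# Print the text between <name> and </name>.
domain_name() {
    sed -n 's:.*<name>\(.*\)</name>.*:\1:p' "$1"
}

domain_name "$xml_file"
```

On a real host, the virsh dumpxml command prints the complete XML configuration of an existing VM, which is a better reference than this sketch.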

Component interaction

When a VM is started, the hypervisor creates an instance of the VM as a user-space process on the host based on the XML configuration. The hypervisor also makes the VM process accessible to the host-based interfaces, such as the virsh, virt-install, and guestfish commands, or the web console GUI.

When these virtualization tools are used, libvirt translates their input into instructions for QEMU. QEMU communicates the instructions to KVM, which ensures that the kernel appropriately assigns the resources necessary to carry out the instructions. As a result, QEMU can execute the corresponding user-space changes, such as creating or modifying a guest, or performing an action in the guest’s operating system.

While QEMU is an essential component of the architecture, it is not intended to be used directly on RHEL 8 systems, due to security concerns. Therefore, using qemu-* commands is not supported by Red Hat, and it is highly recommended to interact with QEMU using libvirt.

For more information on the host-based interfaces, see Tools and interfaces for virtualization management in RHEL 8.

Figure 1.1. RHEL 8 virtualization architecture

1.4. Tools and interfaces for virtualization management in RHEL 8

You can manage virtualization in RHEL 8 using the command-line interface (CLI) or several graphical user interfaces (GUIs).

Command-line interface

The CLI is the most powerful method of managing virtualization in RHEL 8. Prominent CLI commands for virtualization management include:

- virsh - A versatile virtualization command-line utility and shell with a great variety of purposes, depending on the provided arguments. For example:

  - Starting and shutting down a virtual machine - virsh start and virsh shutdown

  - Listing available virtual machines (VMs) - virsh list

  - Creating a virtual machine from a configuration file - virsh create

  - Entering a virtualization shell - virsh

  For more information, see the virsh(1) man page.

- virt-install - A CLI utility for creating new virtual machines. For more information, see the virt-install(1) man page.

- virt-xml - A utility for editing the configuration of a virtual machine.

- guestfish - A utility for examining and modifying virtual machine disk images. For more information, see the guestfish(1) man page.

For instructions on basic virtualization management with CLI, see Chapter 2, Getting started with virtualization in RHEL 8.

Graphical interfaces

You can use the following GUIs to manage virtualization in RHEL 8:

The RHEL 8 web console, also known as Cockpit, provides a remotely accessible and easy to use graphical user interface for managing VMs and virtualization hosts.

For instructions on basic virtualization management with the web console, see Chapter 5, Using the RHEL 8 web console for managing virtual machines.

The Virtual Machine Manager (virt-manager) application provides a specialized GUI for managing VMs and virtualization hosts.

Important: Although still supported in RHEL 8, virt-manager has been deprecated. The RHEL 8 web console is intended to become its replacement in a subsequent release. It is, therefore, recommended that you get familiar with the web console for managing virtualization in a GUI. However, in RHEL 8, some features may only be accessible from either virt-manager or the command line.

The Gnome Boxes application is a lightweight graphical interface to view and access VMs and remote systems. Gnome Boxes is primarily designed for use on desktop systems.

Important: Gnome Boxes is provided as a part of the GNOME desktop environment and is supported on RHEL 8, but Red Hat recommends that you use the web console for managing virtualization in a GUI.

1.5. Red Hat virtualization solutions

The following Red Hat products are built on top of RHEL 8 virtualization features and expand the KVM virtualization capabilities available in RHEL 8. In addition, many limitations of RHEL 8 virtualization do not apply to these products:

- Red Hat Virtualization (RHV)

RHV is designed for enterprise-class scalability and performance, and enables management of your entire virtual infrastructure, including hosts, virtual machines, networks, storage, and users from a centralized graphical interface.

For information about the differences between virtualization in Red Hat Enterprise Linux and Red Hat Virtualization, see the Red Hat Customer Portal.

Red Hat Virtualization can be used by enterprises running large deployments or mission-critical applications. Examples of large deployments suited to Red Hat Virtualization include databases, trading platforms, and messaging systems that must run continuously without any downtime.

For more information about Red Hat Virtualization, see the Red Hat Customer Portal or the Red Hat Virtualization documentation suite.

To download a fully supported 60-day evaluation version of Red Hat Virtualization, see https://access.redhat.com/products/red-hat-virtualization/evaluation

- Red Hat OpenStack Platform (RHOSP)

Red Hat OpenStack Platform offers an integrated foundation to create, deploy, and scale a secure and reliable public or private OpenStack cloud.

For more information about Red Hat OpenStack Platform, see the Red Hat Customer Portal or the Red Hat OpenStack Platform documentation suite.

To download a fully supported 60-day evaluation version of Red Hat OpenStack Platform, see https://access.redhat.com/products/red-hat-openstack-platform/evaluation

In addition, specific Red Hat products provide operating-system-level virtualization, also known as containerization:

- Containers are isolated instances of the host OS and operate on top of an existing OS kernel. For more information on containers, see the Red Hat Customer Portal.

- Containers do not have the versatility of KVM virtualization, but are more lightweight and flexible to handle. For a more detailed comparison, see the Introduction to Linux Containers.

Chapter 2. Getting started with virtualization in RHEL 8

To start using virtualization in RHEL 8, follow the steps below. The default method for this is the command-line interface (CLI), but for user convenience, some of the steps can be completed in the web console GUI.

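- Enable the virtualization module and install the virtualization packages - see Section 2.1, “Enabling virtualization in RHEL 8”.

- Create a guest virtual machine - see Section 2.2, “Creating virtual machines”.

- Start the guest virtual machine - see Section 2.3, “Starting virtual machines”.

- Connect to the guest virtual machine - see Section 2.4, “Connecting to virtual machines”.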

The web console currently provides only a subset of guest management functions for virtual machines, so using the command line is recommended for advanced use of virtualization in RHEL 8.

2.1. Enabling virtualization in RHEL 8

To use virtualization in RHEL 8, you must enable the virtualization module, install virtualization packages, and ensure your system is configured to host virtual machines.

Prerequisites

- Red Hat Enterprise Linux 8 must be installed and registered on your host machine.

Your system must meet the following hardware requirements to work as a virtualization host:

- The architecture of your host machine supports KVM virtualization.

At least the following system resources are available:

- 6 GB free disk space for the host, plus another 6 GB for each intended guest

- 2 GB of RAM for the host, plus another 2 GB for each intended guest
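The resource minimums above scale linearly with the number of guests. As a small worked example, the following sketch computes them for an assumed number of intended guests; the function names and the guest count of 3 are illustrative only, and real workloads may need considerably more.

```shell
# Minimum free disk space in GB for a host plus N guests: 6 + 6 * N.
required_disk_gb() {
    echo $(( 6 + 6 * $1 ))
}

# Minimum RAM in GB for a host plus N guests: 2 + 2 * N.
required_ram_gb() {
    echo $(( 2 + 2 * $1 ))
}

guests=3   # assumed number of intended guests
echo "Disk: $(required_disk_gb "$guests") GB, RAM: $(required_ram_gb "$guests") GB"
# prints "Disk: 24 GB, RAM: 8 GB"
```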

Procedure

Install the packages in the virtualization module:

# yum module install virt

Install the virt-install package:

# yum install virt-install

Verify that your system is prepared to be a virtualization host:

# virt-host-validate

[...]

QEMU: Checking for device assignment IOMMU support : PASS

QEMU: Checking if IOMMU is enabled by kernel : WARN (IOMMU appears to be disabled in kernel. Add intel_iommu=on to kernel cmdline arguments)

LXC: Checking for Linux >= 2.6.26 : PASS

[...]

LXC: Checking for cgroup 'blkio' controller mount-point : PASS

LXC: Checking if device /sys/fs/fuse/connections exists : FAIL (Load the 'fuse' module to enable /proc/ overrides)

If all virt-host-validate checks return a PASS value, your system is prepared for creating virtual machines.

If any of the checks return a FAIL value, follow the displayed instructions to fix the problem.

If any of the checks return a WARN value, consider following the displayed instructions to improve virtualization capabilities.
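When the validation output is long, a quick tally of the results can help decide which of the three cases above applies. The following is a minimal sketch that counts PASS, WARN, and FAIL results in text read from standard input. The summarize_validation function name is hypothetical, and the sketch assumes the output keeps the "check : RESULT" layout shown in the example.

```shell
# Count PASS, WARN, and FAIL results in virt-host-validate style output
# read from standard input.
summarize_validation() {
    awk '
        /: PASS/ { pass++ }
        /: WARN/ { warn++ }
        /: FAIL/ { fail++ }
        END { printf "PASS=%d WARN=%d FAIL=%d\n", pass, warn, fail }
    '
}

# Sample lines copied from the example output in the procedure.
summarize_validation <<'EOF'
QEMU: Checking for device assignment IOMMU support : PASS
QEMU: Checking if IOMMU is enabled by kernel : WARN (IOMMU appears to be disabled in kernel. Add intel_iommu=on to kernel cmdline arguments)
LXC: Checking for Linux >= 2.6.26 : PASS
LXC: Checking for cgroup 'blkio' controller mount-point : PASS
LXC: Checking if device /sys/fs/fuse/connections exists : FAIL (Load the 'fuse' module to enable /proc/ overrides)
EOF
# prints "PASS=3 WARN=1 FAIL=1"
```

On a virtualization host, the same function can read live results by piping the virt-host-validate output into it.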

Additional information

Note that if virtualization is not supported by your host CPU, virt-host-validate generates the following output:

QEMU: Checking for hardware virtualization: FAIL (Only emulated CPUs are available, performance will be significantly limited)

However, attempting to create virtual machines on such a host system fails outright, rather than resulting in reduced performance.

2.2. Creating virtual machines

To create a virtual machine (VM) in RHEL 8, use the command-line interface or the RHEL 8 web console.

2.2.1. Prerequisites

- Virtualization must be installed and enabled on your system.

- Prior to creating VMs on your system, consider the amount of system resources you need to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values may vary significantly depending on the intended tasks and workload of the VMs.

2.2.2. Creating virtual machines using the command-line interface

To create a virtual machine (VM) on your RHEL 8 host using the virt-install utility, follow the instructions below.

Prerequisites

An operating system (OS) installation source, which can be one of the following, and can be available locally or on a network:

- An ISO image of an installation medium

- A disk image of an existing virtual machine installation

- Optionally, a Kickstart file can also be provided for faster and easier configuration of the installation.

Procedure

To create a VM and start its OS installation, use the virt-install command. In its arguments, specify at minimum:

- The name of the new machine

- The amount of allocated memory

- The number of allocated virtual CPUs (vCPUs)

- The type and size of allocated storage

- The type and location of the OS installation source

Based on the chosen installation method, the necessary options and values can vary. See below for examples:

The following creates a VM named demo-guest1 that installs the Windows 10 OS from an ISO image locally stored in the /home/username/Downloads/Win10install.iso file. This VM is also allocated with 2048 MiB of RAM and 2 vCPUs, and an 8 GiB qcow2 virtual disk is automatically configured for the VM.

# virt-install --name demo-guest1 --memory 2048 --vcpus 2 --disk size=8 --os-variant win10 --cdrom /home/username/Downloads/Win10install.iso

The following creates a VM named demo-guest2 that uses the /home/username/Downloads/rhel8.iso image to run a RHEL 8 OS from a live CD. No disk space is assigned to this VM, so changes made during the session will not be preserved. In addition, the VM is allocated with 4096 MiB of RAM and 4 vCPUs.

# virt-install --name demo-guest2 --memory 4096 --vcpus 4 --disk none --livecd --os-variant rhel8.0 --cdrom /home/username/Downloads/rhel8.iso

The following creates a RHEL 8 VM named demo-guest3 that connects to an existing disk image, /home/username/backup/disk.qcow2. This is similar to physically moving a hard drive between machines, so the OS and data available to demo-guest3 are determined by how the image was handled previously. In addition, this VM is allocated with 2048 MiB of RAM and 2 vCPUs.

# virt-install --name demo-guest3 --memory 2048 --vcpus 2 --os-variant rhel8.0 --import --disk /home/username/backup/disk.qcow2

Note that the --os-variant option is highly recommended when importing a disk image. If it is not provided, the performance of the created VM will be negatively affected.

The following creates a VM named demo-guest4 that installs from the http://example.com/OS-install URL. For the installation to start successfully, the URL must contain a working OS installation tree. In addition, the OS is automatically configured using the /home/username/ks.cfg kickstart file. This VM is also allocated with 2048 MiB of RAM, 2 vCPUs, and a 16 GiB qcow2 virtual disk.

# virt-install --name demo-guest4 --memory 2048 --vcpus 2 --disk size=16 --os-variant rhel8.0 --location http://example.com/OS-install --initrd-inject /home/username/ks.cfg --extra-args="ks=file:/ks.cfg console=tty0 console=ttyS0,115200n8"

The following creates a VM named demo-guest5 that installs from a RHEL8.iso file in text-only mode, without graphics. It connects the guest console to the serial console. The VM has 16384 MiB of memory, 16 vCPUs, and a 280 GiB disk. This kind of installation is useful when connecting to a host over a slow network link.

# virt-install --name demo-guest5 --memory 16384 --vcpus 16 --disk size=280 --os-variant rhel8.0 --location RHEL8.iso --nographics --extra-args='console=ttyS0'

The following creates a VM named demo-guest6, which has the same configuration as demo-guest5, but resides on the 10.0.0.1 remote host.

# virt-install --connect qemu+ssh://root@10.0.0.1/system --name demo-guest6 --memory 16384 --vcpus 16 --disk size=280 --os-variant rhel8.0 --location RHEL8.iso --nographics --extra-args='console=ttyS0'

If the VM is created successfully, a virt-viewer window opens with a graphical console of the VM and starts the guest OS installation.

A number of other options can be specified for virt-install to further configure the VM and its OS installation. For details, see the virt-install man page.
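To make the minimum set of virt-install arguments from this procedure easier to see in one place, the following sketch assembles a command line from them and prints it without running it. The build_virt_install helper is hypothetical and only covers the ISO-based case from the first example. It does no quoting, so it assumes that none of the values contain spaces.

```shell
# Print (but do not run) a virt-install command built from the minimum arguments.
# Arguments: name, memory in MiB, vCPU count, disk size in GiB, OS variant, ISO path
build_virt_install() {
    printf 'virt-install --name %s --memory %s --vcpus %s --disk size=%s --os-variant %s --cdrom %s\n' \
        "$1" "$2" "$3" "$4" "$5" "$6"
}

# Reproduces the demo-guest1 example from the procedure.
build_virt_install demo-guest1 2048 2 8 win10 /home/username/Downloads/Win10install.iso
```

Printing the command first makes it possible to review the values before creating the VM.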

2.2.3. Creating virtual machines using the RHEL 8 web console

To create a VM on the host machine to which the web console is connected, follow the instructions below.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

- Before creating VMs, consider the amount of system resources you need to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values may vary significantly depending on the intended tasks and workload of the VMs.

A locally available operating system (OS) installation source, which can be one of the following:

- An ISO image of an installation medium

- A disk image of an existing guest installation

Procedure

Click in the Virtual Machines interface of the RHEL 8 web console.

The Create New Virtual Machine dialog appears.

Enter the basic configuration of the virtual machine you want to create.

- Connection - The connection to the host to be used by the virtual machine.

- Name - The name of the virtual machine.

- Installation Source Type - The type of the installation source: Filesystem, URL

- Installation Source - The path or URL that points to the installation source.

- OS Vendor - The vendor of the virtual machine’s operating system.

- Operating System - The virtual machine’s operating system.

- Memory - The amount of memory with which to configure the virtual machine.

- Storage Size - The amount of storage space with which to configure the virtual machine.

- Immediately Start VM - Whether or not the virtual machine will start immediately after it is created.

Click .

The virtual machine is created. If the Immediately Start VM checkbox is selected, the VM will immediately start and begin installing the guest operating system.

You must install the operating system the first time the virtual machine is run.

Additional resources

- For information on installing an operating system on a virtual machine, see Section 5.3.2, “Installing operating systems using the RHEL 8 web console”.

2.3. Starting virtual machines

To start a virtual machine (VM) in RHEL 8, you can use the command line interface or the web console GUI.

2.3.1. Prerequisites

- Before a VM can be started, it must be created and ideally also installed with an OS. For instructions on how to do so, see Section 2.2, “Creating virtual machines”.

2.3.2. Starting a virtual machine using the command-line interface

To start a local VM using the command-line interface, use the virsh start command:

# virsh start demo-guest1

Domain demo-guest1 started

If the VM is on a remote host, use a QEMU+SSH connection to the host. For example, the following starts the demo-guest1 VM on the 192.168.123.123 host:

# virsh -c qemu+ssh://root@192.168.123.123/system start demo-guest1

root@192.168.123.123's password:

Last login: Mon Feb 18 07:28:55 2019

Domain demo-guest1 started

Note that managing VMs on remote hosts can be simplified by modifying your libvirt and SSH configuration.

2.3.3. Powering up virtual machines in the RHEL 8 web console

If a VM is in the shut off state, you can start it using the RHEL 8 web console.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

- Click a row with the name of the virtual machine you want to start.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click .

The virtual machine starts.

Additional resources

- For information on shutting down a virtual machine, see Section 5.5.2, “Powering down virtual machines in the RHEL 8 web console”.

- For information on restarting a virtual machine, see Section 5.5.3, “Restarting virtual machines using the RHEL 8 web console”.

- For information on sending a non-maskable interrupt to a virtual machine, see Section 5.5.4, “Sending non-maskable interrupts to VMs using the RHEL 8 web console”.

2.4. Connecting to virtual machines

To interact with a virtual machine (VM) in RHEL 8, you need to connect to it by doing one of the following:

- When using the RHEL 8 web console, use its Virtual Machines pane. For more information, see Section 2.4.2, “Viewing the virtual machine graphical console in the RHEL 8 web console”.

- If you need to interact with a VM graphical display without using the RHEL 8 web console, use the Virt Viewer application. For details, see Section 2.4.3, “Opening a virtual machine graphical console using Virt Viewer”.

- When a graphical display is not possible or not necessary, use an SSH terminal connection.

- When the virtual machine is not reachable from your system by using a network, use the virsh console.

If the VMs to which you are connecting are on a remote host rather than a local one, you can optionally also configure your system for more convenient access to remote hosts.

2.4.1. Prerequisites

2.4.2. Viewing the virtual machine graphical console in the RHEL 8 web console

You can view the graphical console of a selected virtual machine in the RHEL 8 web console. The virtual machine console shows the graphical output of the virtual machine.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

- Ensure that both the host and the VM support a graphical interface.

Procedure

- Click a row with the name of the virtual machine whose graphical console you want to view.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click .

The graphical console appears in the web interface.

You can interact with the virtual machine console using the mouse and keyboard in the same manner you interact with a real machine. The display in the virtual machine console reflects the activities being performed on the virtual machine.

The server on which the RHEL 8 web console is running can intercept specific key combinations, such as Ctrl+Alt+F1, preventing them from being sent to the virtual machine.

To send such key combinations, click the menu and select the key sequence to send.

For example, to send the Ctrl+Alt+F1 combination to the virtual machine, click the menu and select the menu entry.

Additional resources

- For details on viewing the graphical console in a remote viewer, see Section 5.10.2, “Viewing virtual machine consoles in remote viewers using the RHEL 8 web console”.

- For details on viewing the serial console in the RHEL 8 web console, see Section 5.10.3, “Viewing the virtual machine serial console in the RHEL 8 web console”.

2.4.3. Opening a virtual machine graphical console using Virt Viewer

To connect to a graphical console of a KVM virtual machine (VM) and open it in the Virt Viewer desktop application, follow the procedure below.

Prerequisites

- Your system, as well as the virtual machine you are connecting to, must support graphical displays.

- If the target VM is located on a remote host, connection and root access privileges to the host are needed.

- [Optional] If the target VM is located on a remote host, set up your libvirt and SSH for more convenient access to remote hosts.

Procedure

To connect to a local VM, use the following command and replace guest-name with the name of the VM you want to connect to:

# virt-viewer guest-name

To connect to a remote VM, use the virt-viewer command with the SSH protocol. For example, the following command connects as root to a VM called guest-name, located on remote system 10.0.0.1. The connection also requires root authentication for 10.0.0.1.

# virt-viewer --direct --connect qemu+ssh://root@10.0.0.1/system guest-name

root@10.0.0.1's password:

If the connection works correctly, the VM display is shown in the Virt Viewer window.

You can interact with the VM console using the mouse and keyboard in the same manner you interact with a real machine. The display in the VM console reflects the activities being performed on the VM.

Additional resources

- For more information on using Virt Viewer, see the virt-viewer man page.

- Connecting to VMs on a remote host can be simplified by modifying your libvirt and SSH configuration.

- For management of virtual machines in an interactive GUI in RHEL 8, you can use the web console interface. For more information, see Section 5.10, “Interacting with virtual machines using the RHEL 8 web console”.

2.4.4. Connecting to a virtual machine using SSH

To interact with the terminal of a virtual machine (VM) using the SSH connection protocol, follow the procedure below:

Prerequisites

- Network connection and root access privileges to the target VM.

- The libvirt-nss component must be installed and enabled on the VM’s host. If it is not, do the following:

Install the libvirt-nss package:

# yum install libvirt-nss

Edit the /etc/nsswitch.conf file and add libvirt_guest to the hosts line:

[...]

passwd: compat

shadow: compat

group: compat

hosts: files libvirt_guest dns

[...]

- If the target VM is located on a remote host, connection and root access privileges to the host are also needed.
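The /etc/nsswitch.conf change described in the prerequisites can also be previewed with a short script before editing the real file. The following is a sketch only: the add_libvirt_guest function name is hypothetical, it assumes that the hosts line already contains the word files, and it prints the modified content instead of changing any file in place.

```shell
# Print the contents of an nsswitch.conf-style file with libvirt_guest added
# after "files" on the hosts line, unless libvirt_guest is already present.
add_libvirt_guest() {
    awk '/^hosts:/ && !/libvirt_guest/ { sub(/files/, "files libvirt_guest") } { print }' "$1"
}

# Try it on a temporary sample file, not on /etc/nsswitch.conf itself.
sample=$(mktemp)
printf '%s\n' 'passwd: compat' 'hosts: files dns' > "$sample"
add_libvirt_guest "$sample"
```

Because lines that already mention libvirt_guest are left untouched, running the function on its own output does not add the entry twice.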

Procedure

Optional: When connecting to a remote guest, SSH into its physical host first. The following example demonstrates connecting to a host machine 10.0.0.1 using its root credentials:

# ssh root@10.0.0.1

root@10.0.0.1's password:

Last login: Mon Sep 24 12:05:36 2018

root~#

Use the VM’s name and user access credentials to connect to it. For example, the following connects to the "testguest1" VM using its root credentials:

# ssh root@testguest1

root@testguest1's password:

Last login: Wed Sep 12 12:05:36 2018

root~]#

Note: If you do not know the virtual machine’s name, you can list all VMs available on the host using the virsh list --all command:

# virsh list --all

Id Name State

----------------------------------------------------

2 testguest1 running

- testguest2 shut off

2.4.5. Opening a virtual machine serial console

Using the virsh console command, it is possible to connect to the serial console of a virtual machine (VM).

This is useful when the VM:

- Does not provide VNC or SPICE protocols, and thus does not offer video display for GUI tools.

- Does not have a network connection, and thus cannot be interacted with using SSH.

Prerequisites

The VM must have the serial console configured in its kernel command line. To verify this, the cat /proc/cmdline command output on the VM should include console=ttyS0. For example:

# cat /proc/cmdline

BOOT_IMAGE=/vmlinuz-3.10.0-948.el7.x86_64 root=/dev/mapper/rhel-root ro console=tty0 console=ttyS0,9600n8 rd.lvm.lv=rhel/root rd.lvm.lv=rhel/swap rhgb

If the serial console is not set up properly on a VM, using virsh console to connect to the VM connects you to an unresponsive guest console. However, you can still exit the unresponsive console by using the Ctrl+] shortcut.

To set up serial console on the VM, do the following:

- On the VM, edit the /etc/default/grub file and add console=ttyS0 to the line that starts with GRUB_CMDLINE_LINUX.

- Clear the kernel options that may prevent your changes from taking effect:

# grub2-editenv - unset kernelopts

- Reload the Grub configuration:

# grub2-mkconfig -o /boot/grub2/grub.cfg

Generating grub configuration file ...

Found linux image: /boot/vmlinuz-3.10.0-948.el7.x86_64

Found initrd image: /boot/initramfs-3.10.0-948.el7.x86_64.img

[...]

done

- Reboot the VM.
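The edit to the GRUB_CMDLINE_LINUX line can be previewed with a short script before changing the real file. The following is a sketch under stated assumptions: the add_serial_console function name and the sample option values are hypothetical, and the script assumes that the GRUB_CMDLINE_LINUX value is enclosed in double quotes and ends the line. It prints the result rather than modifying /etc/default/grub.

```shell
# Print a grub defaults file with console=ttyS0 appended to the
# GRUB_CMDLINE_LINUX value, unless a console=ttyS0 option is already there.
add_serial_console() {
    awk '/^GRUB_CMDLINE_LINUX=/ && !/console=ttyS0/ { sub(/"$/, " console=ttyS0\"") } { print }' "$1"
}

# Try it on a temporary sample file with made-up option values.
sample=$(mktemp)
printf '%s\n' 'GRUB_TIMEOUT=5' 'GRUB_CMDLINE_LINUX="crashkernel=auto rhgb quiet"' > "$sample"
add_serial_console "$sample"
```

After the change is applied to the real file, the remaining steps of clearing kernelopts, regenerating the Grub configuration, and rebooting still apply.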


Procedure

On your host system, use the virsh console command. The following example connects to the guest1 virtual machine, if the libvirt driver supports safe console handling:

# virsh console guest1 --safe

Connected to domain guest1

Escape character is ^]

Subscription-name

Kernel 3.10.0-948.el7.x86_64 on an x86_64

localhost login:

- You can interact with the virsh console in the same way as with a standard command-line interface.

Additional resources

- For more information about the VM serial console, see the virsh man page.
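The prerequisite check from this section can also be scripted. The following is a minimal sketch rather than part of the documented procedure: has_serial_console is a hypothetical helper name, and it only tests whether a kernel command line string contains a console=ttyS0 argument, with or without options such as ,9600n8.

```shell
# Hypothetical helper: succeeds if the kernel command line passed as $1
# contains a console=ttyS0 argument (bare, or followed by ",options").
has_serial_console() {
    case " $1 " in
        *" console=ttyS0 "*|*" console=ttyS0,"*) return 0 ;;
    esac
    return 1
}

# Example use on the VM (assumption: run inside the guest):
#   has_serial_console "$(cat /proc/cmdline)" && echo "serial console configured"
```

The check is deliberately strict about word boundaries, so an argument such as console=ttyS01 does not count as a match.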

2.4.6. Setting up easy access to remote virtualization hosts

When managing VMs on a remote host system using libvirt utilities, it is recommended to use the -c qemu+ssh://root@hostname/system syntax. For example, to use the virsh list command as root on the 10.0.0.1 host:

# virsh -c qemu+ssh://root@10.0.0.1/system list
root@10.0.0.1's password:
Last login: Mon Feb 18 07:28:55 2019
Id Name State
---------------------------------
1 remote-guest running

However, for convenience, you can remove the need to specify the connection details in full by modifying your SSH and libvirt configuration. For example, you will be able to do:

# virsh -c remote-host list
root@10.0.0.1's password:
Last login: Mon Feb 18 07:28:55 2019
Id Name State
---------------------------------
1 remote-guest running

To enable this improvement, follow the instructions below.

Procedure

- Edit or create the ~/.ssh/config file and add the following to it, where host-alias is a shortened name associated with a specific remote host, and hosturl is the URL address of the host.

Host host-alias
  User root
  Hostname hosturl

For example, the following sets up the tyrannosaurus alias for root@10.0.0.1:

Host tyrannosaurus
  User root
  Hostname 10.0.0.1

- Edit or create the /etc/libvirt/libvirt.conf file, and add the following, where qemu-host-alias is a host alias that QEMU and libvirt utilities will associate with the intended host:

uri_aliases = [
  "qemu-host-alias=qemu+ssh://host-alias/system",
]

For example, the following uses the tyrannosaurus alias configured in the previous step to set up the t-rex alias, which stands for qemu+ssh://10.0.0.1/system:

uri_aliases = [
  "t-rex=qemu+ssh://tyrannosaurus/system",
]

As a result, you can manage remote VMs by using libvirt-based utilities on the local system and adding -c qemu-host-alias to the commands. This automatically performs the commands over SSH on the remote host.

For example, the following lists VMs on the 10.0.0.1 remote host, the connection to which was set up as t-rex in the previous steps:

$ virsh -c t-rex list
root@10.0.0.1's password:
Last login: Mon Feb 18 07:28:55 2019
Id Name State
---------------------------------
1 velociraptor running

- [Optional] If you want to use libvirt utilities exclusively on a single remote host, you can also set a specific connection as the default target for libvirt-based utilities. To do so, edit the /etc/libvirt/libvirt.conf file and set the value of the uri_default parameter to qemu-host-alias. For example, the following uses the t-rex host alias set up in the previous steps as a default libvirt target.

# These can be used in cases when no URI is supplied by the application
# (@uri_default also prevents probing of the hypervisor driver).
#
uri_default = "t-rex"

As a result, all libvirt-based commands will automatically be performed on the specified remote host.

$ virsh list
root@10.0.0.1's password:
Last login: Mon Feb 18 07:28:55 2019
Id Name State
---------------------------------
1 velociraptor running

However, this is not recommended if you also want to manage VMs on your local host or on different remote hosts.

Additional resources

When connecting to a remote host, it is also possible to avoid having to provide the root password to the remote system. To do so, do one or more of the following:

- Set up key-based SSH access to the remote host.

- Use SSH connection multiplexing to connect to the remote system.

- Set up a Kerberos authentication ticket on the remote system.

Utilities that can use the -c (or --connect) option and the remote host access configuration described above include:

- virt-install

- virt-viewer

- virsh

- virt-manager
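The two configuration fragments from the procedure in this section follow a fixed pattern, so they can be generated rather than typed by hand. The sketch below is illustrative only: ssh_host_stanza and libvirt_uri_alias are hypothetical helper names, and the functions merely print text for you to review before adding it to ~/.ssh/config or /etc/libvirt/libvirt.conf.

```shell
# Hypothetical helper: print an SSH client stanza for a root connection.
# $1 = SSH host alias, $2 = host name or address of the remote host.
ssh_host_stanza() {
    printf 'Host %s\n    User root\n    Hostname %s\n' "$1" "$2"
}

# Hypothetical helper: print a libvirt uri_aliases setting.
# $1 = libvirt alias, $2 = SSH host alias defined in ~/.ssh/config.
libvirt_uri_alias() {
    printf 'uri_aliases = [ "%s=qemu+ssh://%s/system", ]\n' "$1" "$2"
}
```

For example, ssh_host_stanza tyrannosaurus 10.0.0.1 prints the stanza used in the procedure, and libvirt_uri_alias t-rex tyrannosaurus prints the matching alias line. Printing instead of appending is a deliberate choice here, because libvirt.conf should contain a single uri_aliases list.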

2.5. Shutting down virtual machines

To shut down a running virtual machine in Red Hat Enterprise Linux 8, use the command line interface or the web console GUI.

2.5.1. Shutting down a virtual machine using the command-line interface

To shut down a responsive virtual machine (VM), do one of the following:

- Use a shutdown command appropriate to the guest OS while connected to the guest.

- Use the virsh shutdown command on the host:

If the VM is on a local host:

# virsh shutdown demo-guest1
Domain demo-guest1 is being shutdown

If the VM is on a remote host, in this example 10.0.0.1:

# virsh -c qemu+ssh://root@10.0.0.1/system shutdown demo-guest1
root@10.0.0.1's password:
Last login: Mon Feb 18 07:28:55 2019
Domain demo-guest1 is being shutdown

To force a guest to shut down, for example if it has become unresponsive, use the virsh destroy command on the host:

# virsh destroy demo-guest1

Domain demo-guest1 destroyed

The virsh destroy command does not actually delete or remove the guest configuration or disk images. It only destroys the running guest instance. However, in rare cases, this command may cause corruption of the guest’s file system, so using virsh destroy is only recommended if all other shutdown methods have failed.
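Because virsh destroy is a last resort, a script can first request a graceful shutdown and only fall back to it after a timeout. The following is a minimal sketch under stated assumptions: shutdown_and_wait is a hypothetical helper name, the 60-second default timeout is arbitrary, and the comparison assumes that virsh domstate reports the untranslated "shut off" state.

```shell
# Hypothetical helper: request a graceful shutdown and poll until the VM stops.
# $1 = VM name, $2 = timeout in seconds (default 60, an arbitrary choice).
# Returns 0 once the VM is "shut off", 1 on error or timeout.
shutdown_and_wait() {
    vm=$1
    timeout=${2:-60}
    virsh shutdown "$vm" || return 1
    while [ "$timeout" -gt 0 ]; do
        # virsh domstate prints the current state of the domain
        if [ "$(virsh domstate "$vm")" = "shut off" ]; then
            return 0
        fi
        sleep 1
        timeout=$((timeout - 1))
    done
    return 1
}

# Example use (forces the VM off only if the graceful attempt fails):
#   shutdown_and_wait demo-guest1 120 || virsh destroy demo-guest1
```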

2.5.2. Powering down virtual machines in the RHEL 8 web console

If a virtual machine is in the running state, you can shut it down using the RHEL 8 web console.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

- Click a row with the name of the virtual machine you want to shut down.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Shut Down.

The virtual machine shuts down.

If the virtual machine does not shut down, click the arrow next to the Shut Down button and select Force Shut Down.

Additional resources

- For information on starting a virtual machine, see Section 5.5.1, “Powering up virtual machines in the RHEL 8 web console”.

- For information on restarting a virtual machine, see Section 5.5.3, “Restarting virtual machines using the RHEL 8 web console”.

- For information on sending a non-maskable interrupt to a virtual machine, see Section 5.5.4, “Sending non-maskable interrupts to VMs using the RHEL 8 web console”.

Chapter 3. Getting started with virtualization in RHEL 8 on IBM POWER

When using RHEL 8 on IBM POWER8 or POWER9 hardware, it is possible to use KVM virtualization. However, when enabling the KVM hypervisor on your system, extra steps are needed compared to virtualization on AMD64 and Intel 64 architectures. Certain RHEL 8 virtualization features also have different or restricted functionality on IBM POWER.

Apart from the information in the following sections, using virtualization on IBM POWER works the same as on AMD64 and Intel 64. Therefore, you can see other RHEL 8 virtualization documentation for more information about using virtualization on IBM POWER.

3.1. Enabling virtualization on IBM POWER

To set up a KVM hypervisor and be able to create virtual machines (VMs) on an IBM POWER8 or IBM POWER9 system running RHEL 8, follow the instructions below.

Prerequisites

- RHEL 8 is installed and registered on your host machine.

The following system resources are available, or more:

- 6 GB free disk space for the host, plus another 6 GB for each intended guest.

- 2 GB of RAM for the host, plus another 2 GB for each intended guest.

- Your CPU machine type must support IBM POWER virtualization.

To verify this, query the platform information in your /proc/cpuinfo file.

# grep ^platform /proc/cpuinfo
platform : PowerNV

If the output of this command includes the PowerNV entry, you are running a PowerNV machine type and can use virtualization on IBM POWER.

Procedure

- Load the KVM-HV kernel module:

# modprobe kvm_hv

- Verify that the KVM kernel module is loaded:

# lsmod | grep kvm

If KVM loaded successfully, the output of this command includes kvm_hv.

- Install the packages in the virtualization module:

# yum module install virt

- Install the virt-install package:

# yum install virt-install

- Verify that your system is prepared to be a virtualization host:

# virt-host-validate
[...]
QEMU: Checking if device /dev/vhost-net exists : PASS
QEMU: Checking if device /dev/net/tun exists : PASS
QEMU: Checking for cgroup 'memory' controller support : PASS
QEMU: Checking for cgroup 'memory' controller mount-point : PASS
[...]
QEMU: Checking for cgroup 'blkio' controller support : PASS
QEMU: Checking for cgroup 'blkio' controller mount-point : PASS
QEMU: Checking if IOMMU is enabled by kernel : PASS

If all virt-host-validate checks return a PASS value, your system is prepared for creating virtual machines.

If any of the checks return a FAIL value, follow the displayed instructions to fix the problem.

If any of the checks return a WARN value, consider following the displayed instructions to improve virtualization capabilities.

Additional information

Note that if virtualization is not supported by your host CPU, virt-host-validate generates the following output:

QEMU: Checking for hardware virtualization: FAIL (Only emulated CPUs are available, performance will be significantly limited)

However, attempting to create VMs on such a host system will fail, rather than have performance problems.
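When the host check is part of an automated setup, the PASS, WARN, and FAIL rules described in this section can be applied to the virt-host-validate output by a small filter. This is a sketch, not a Red Hat tool: validate_ok is a hypothetical helper name, and it assumes each result line ends its description with a colon followed by the status word, as in the sample output.

```shell
# Hypothetical helper: read virt-host-validate output on standard input.
# Prints FAIL and WARN lines, and succeeds only if no check reported FAIL.
validate_ok() {
    awk '
        /: FAIL/ { fail++; print "failed:  " $0 }
        /: WARN/ { print "warning: " $0 }
        END { exit (fail > 0) }
    '
}

# Example use on the host:
#   virt-host-validate | validate_ok && echo "host is ready for VMs"
```

WARN results are reported but do not change the exit status, which mirrors the guidance that they are optional improvements rather than blockers.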

3.2. How virtualization on IBM POWER differs from AMD64 and Intel 64

KVM virtualization in RHEL 8 on IBM POWER systems is different from KVM on AMD64 and Intel 64 systems in a number of aspects, notably:

- Memory requirements

- VMs on IBM POWER consume more memory. Therefore, the recommended minimum memory allocation for a virtual machine (VM) on an IBM POWER host is 2GB RAM.

- Display protocols

The SPICE protocol is not supported on IBM POWER systems. To display the graphical output of a VM, use the VNC protocol. In addition, only the following virtual graphics card devices are supported:

- vga - only supported in -vga std mode and not in -vga cirrus mode.

- virtio-vga

- virtio-gpu

- SMBIOS

- SMBIOS configuration is not available

- Memory allocation errors

POWER8 VMs, including compatibility mode VMs, may fail with an error similar to:

qemu-kvm: Failed to allocate KVM HPT of order 33 (try smaller maxmem?): Cannot allocate memory

This is significantly more likely to occur on VMs that use RHEL 7.3 and prior as the guest OS.

To fix the problem, increase the CMA memory pool available for the guest’s hashed page table (HPT) by adding kvm_cma_resv_ratio=memory to the host’s kernel command line, where memory is the percentage of the host memory that should be reserved for the CMA pool (defaults to 5).

- Huge pages

Transparent huge pages (THPs) do not provide any notable performance benefits on IBM POWER8 VMs. However, IBM POWER9 VMs can benefit from THPs as expected.

In addition, the sizes of static huge pages on IBM POWER8 systems are 16 MiB and 16 GiB, as opposed to 2 MiB and 1 GiB on AMD64, Intel 64, and IBM POWER9. As a consequence, to migrate a VM configured with static huge pages from an IBM POWER8 host to an IBM POWER9 host, you must first set up 1 GiB huge pages on the VM.

- kvm-clock

The kvm-clock service does not have to be configured for time management in VMs on IBM POWER9.

- pvpanic

IBM POWER9 systems do not support the pvpanic device. However, an equivalent functionality is available and activated by default on this architecture. To enable it in a VM, use the <on_crash> XML configuration element with the preserve value.

In addition, make sure to remove the <panic> element from the <devices> section, as its presence can lead to the VM failing to boot on IBM POWER systems.

- Single-threaded host

- On IBM POWER8 systems, the host machine must run in single-threaded mode to support VMs. This is automatically configured if the qemu-kvm packages are installed. However, VMs running on single-threaded hosts can still use multiple threads.

- Peripheral devices

A number of peripheral devices supported on AMD64 and Intel 64 systems are not supported on IBM POWER systems, or a different device is supported as a replacement.

- Devices used for PCI-E hierarchy, including ioh3420 and xio3130-downstream, are not supported. This functionality is replaced by multiple independent PCI root bridges provided by the spapr-pci-host-bridge device.

- UHCI and EHCI PCI controllers are not supported. Use OHCI and XHCI controllers instead.

- IDE devices, including the virtual IDE CD-ROM (ide-cd) and the virtual IDE disk (ide-hd), are not supported. Use the virtio-scsi and virtio-blk devices instead.

- Emulated PCI NICs (rtl8139) are not supported. Use the virtio-net device instead.

- Sound devices, including intel-hda, hda-output, and AC97, are not supported.

- USB redirection devices, including usb-redir and usb-tablet, are not supported.

- v2v and p2v

The virt-v2v and virt-p2v utilities are only supported on the AMD64 and Intel 64 architecture. Because of this, they are not provided on IBM POWER.

Chapter 4. Getting started with virtualization in RHEL 8 on IBM Z

When using RHEL 8 on IBM Z hardware, it is possible to use KVM virtualization. However, when enabling the KVM hypervisor on your system, extra steps are needed compared to virtualization on AMD64 and Intel 64 architectures. Certain RHEL 8 virtualization features also have different or restricted functionality on IBM Z.

Apart from the information in the following sections, using virtualization on IBM Z works the same as on AMD64 and Intel 64. Therefore, you can see other RHEL 8 virtualization documentation for more information about using virtualization on IBM Z.

4.1. Enabling virtualization on IBM Z

To set up a KVM hypervisor and be able to create virtual machines (VMs) on an IBM Z system running RHEL 8, follow the instructions below.

Prerequisites

- RHEL 8 is installed and registered on your host machine.

The following system resources are available, or more:

- 6 GB free disk space for the host, plus another 6 GB for each intended guest.

- 2 GB of RAM for the host, plus another 2 GB for each intended guest.

- Your IBM Z host system needs to use a z13 CPU or later.

- RHEL 8 has to be installed on a logical partition (LPAR). In addition, the LPAR must support the start-interpretive execution (SIE) virtualization functions.

To verify this, search for sie in your /proc/cpuinfo file.

# grep sie /proc/cpuinfo
features : esan3 zarch stfle msa ldisp eimm dfp edat etf3eh highgprs te sie

Procedure

- Load the KVM kernel module:

# modprobe kvm

- Verify that the KVM kernel module is loaded:

# lsmod | grep kvm

If KVM loaded successfully, the output of this command includes kvm.

- Install the packages in the virtualization module:

# yum module install virt

- Install the virt-install package:

# yum install virt-install

- Verify that your system is prepared to be a virtualization host:

# virt-host-validate
[...]
QEMU: Checking if device /dev/kvm is accessible : PASS
QEMU: Checking if device /dev/vhost-net exists : PASS
QEMU: Checking if device /dev/net/tun exists : PASS
QEMU: Checking for cgroup 'memory' controller support : PASS
QEMU: Checking for cgroup 'memory' controller mount-point : PASS
[...]

If all virt-host-validate checks return a PASS value, your system is prepared for creating virtual machines.

If any of the checks return a FAIL value, follow the displayed instructions to fix the problem.

If any of the checks return a WARN value, consider following the displayed instructions to improve virtualization capabilities.

Additional information

Note that if virtualization is not supported by your host CPU, virt-host-validate generates the following output:

QEMU: Checking for hardware virtualization: FAIL (Only emulated CPUs are available, performance will be significantly limited)

However, attempting to create VMs on such a host system will fail, rather than have performance problems.
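The SIE prerequisite check from this section can be wrapped in a function so that a setup script can stop early on an unsuitable LPAR. This is a minimal sketch: has_cpu_feature is a hypothetical helper name, and it assumes the s390x cpuinfo layout shown in the prerequisites, where a single features line lists the CPU facilities.

```shell
# Hypothetical helper: succeeds if the "features" line of a cpuinfo-style
# file lists the given feature as a whole word.
# $1 = feature name, $2 = file to read (defaults to /proc/cpuinfo).
has_cpu_feature() {
    grep '^features' "${2:-/proc/cpuinfo}" | grep -qw -- "$1"
}

# Example use on the IBM Z host:
#   has_cpu_feature sie || echo "SIE is not available on this LPAR" >&2
```

Matching whole words avoids false positives from longer feature names that merely contain the string sie.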

4.2. How virtualization on IBM Z differs from AMD64 and Intel 64

KVM virtualization in RHEL 8 on IBM Z systems differs from KVM on AMD64 and Intel 64 systems in the following:

- No graphical output

Displaying the VM graphical output is not possible when connecting to the VM using the VNC protocol. This is due to the gnome-desktop utility not being supported on IBM Z.

- PCI and USB devices

Virtual PCI and USB devices are not supported on IBM Z. This also means that virtio-*-pci devices are unsupported and virtio-*-ccw devices should be used instead. For example, use virtio-net-ccw instead of virtio-net-pci.

Note that direct attachment of PCI devices, also known as PCI passthrough, is supported.

- Device boot order

IBM Z does not support the <boot dev='device'> XML configuration element. To define device boot order, use the <boot order='number'> element in the <devices> section of the XML. For example:

<devices>
  <disk type='file' snapshot='external'>
    <driver name="tap" type="aio" cache="default"/>
    <source file='/var/lib/xen/images/fv0' startupPolicy='optional'>
      <seclabel relabel='no'/>
    </source>
    <target dev='hda' bus='ide'/>
    <iotune>
      <total_bytes_sec>10000000</total_bytes_sec>
      <read_iops_sec>400000</read_iops_sec>
      <write_iops_sec>100000</write_iops_sec>
    </iotune>
    <boot order='2'/>
    [...]
  </disk>

Note

Using <boot order='number'> for boot order management is preferred also on AMD64 and Intel 64 hosts.

- vfio-ap

- VMs on an IBM Z host can use the vfio-ap cryptographic device passthrough, which is not supported on any other architectures.

- SMBIOS

- SMBIOS configuration is not available on IBM Z.

- Watchdog devices

If using watchdog devices in your VM on an IBM Z host, use the diag288 model. For example:

<devices>
  <watchdog model='diag288' action='poweroff'/>
</devices>

- kvm-clock

The kvm-clock service is specific to AMD64 and Intel 64 systems, and does not have to be configured for VM time management on IBM Z.

- v2v and p2v

The virt-v2v and virt-p2v utilities are only supported on the AMD64 and Intel 64 architecture. Because of this, they are not provided on IBM Z.

Chapter 5. Using the RHEL 8 web console for managing virtual machines

To manage virtual machines in a graphical interface, you can use the Virtual Machines pane in the RHEL 8 web console.

The following sections describe the web console’s virtualization management capabilities and provide instructions for using them.

5.1. Overview of virtual machine management using the RHEL 8 web console

The RHEL 8 web console is a web-based interface for system administration. With the installation of a web console plug-in, the web console can be used to manage virtual machines (VMs) on the servers to which the web console can connect. It provides a graphical view of VMs on a host system to which the web console can connect, and allows monitoring system resources and adjusting configuration with ease.

Using the RHEL 8 web console for VM management, you can do the following:

- Create and delete VMs

- Install operating systems on VMs

- Run and shut down VMs

- View information about VMs

- Create and attach disks to VMs

- Configure virtual CPU settings for VMs

- Manage virtual network interfaces

- Interact with VMs using VM consoles

The Virtual Machine Manager (virt-manager) application is still supported in RHEL 8 but has been deprecated. The RHEL 8 web console is intended to become its replacement in a subsequent release. It is, therefore, recommended that you get familiar with the web console for managing virtualization in a GUI. However, in RHEL 8, some features may only be accessible from either virt-manager or the command line.

5.2. Setting up the RHEL 8 web console to manage virtual machines

Before using the RHEL 8 web console to manage VMs, you must install the web console virtual machine plug-in.

Prerequisites

Ensure that the web console is installed on your machine.

$ yum info cockpit
Installed Packages
Name : cockpit
[...]

If the web console is not installed, see the Managing systems using the RHEL 8 web console guide for more information about installing the web console.

Procedure

Install the cockpit-machines plug-in.

# yum install cockpit-machines

If the installation is successful, Virtual Machines appears in the web console side menu.

5.3. Creating virtual machines and installing guest operating systems using the RHEL 8 web console

The following sections provide information on how to use the RHEL 8 web console to create virtual machines (VMs) and install operating systems on VMs.

5.3.1. Creating virtual machines using the RHEL 8 web console

To create a VM on the host machine to which the web console is connected, follow the instructions below.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

- Before creating VMs, consider the amount of system resources you need to allocate to your VMs, such as disk space, RAM, or CPUs. The recommended values may vary significantly depending on the intended tasks and workload of the VMs.

A locally available operating system (OS) installation source, which can be one of the following:

- An ISO image of an installation medium

- A disk image of an existing guest installation

Procedure

Click Create VM in the Virtual Machines interface of the RHEL 8 web console.

The Create New Virtual Machine dialog appears.

Enter the basic configuration of the virtual machine you want to create.

- Connection - The connection to the host to be used by the virtual machine.

- Name - The name of the virtual machine.

- Installation Source Type - The type of the installation source: Filesystem, URL

- Installation Source - The path or URL that points to the installation source.

- OS Vendor - The vendor of the virtual machine’s operating system.

- Operating System - The virtual machine’s operating system.

- Memory - The amount of memory with which to configure the virtual machine.

- Storage Size - The amount of storage space with which to configure the virtual machine.

- Immediately Start VM - Whether or not the virtual machine will start immediately after it is created.

Click Create.

The virtual machine is created. If the Immediately Start VM checkbox is selected, the VM will immediately start and begin installing the guest operating system.

You must install the operating system the first time the virtual machine is run.

Additional resources

- For information on installing an operating system on a virtual machine, see Section 5.3.2, “Installing operating systems using the RHEL 8 web console”.

5.3.2. Installing operating systems using the RHEL 8 web console

The first time a virtual machine loads, you must install an operating system on the virtual machine.

Prerequisites

Before using the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

- A VM on which to install an operating system.

Procedure

- Click Install.

The installation routine of the operating system runs in the virtual machine console.

If the Immediately Start VM checkbox in the Create New Virtual Machine dialog is checked, the installation routine of the operating system starts automatically when the virtual machine is created.

If the installation routine fails, the virtual machine must be deleted and recreated.

5.4. Deleting virtual machines using the RHEL 8 web console

You can delete a virtual machine and its associated storage files from the host to which the RHEL 8 web console is connected.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

In the Virtual Machines interface of the RHEL 8 web console, click the name of the VM you want to delete.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

Click Delete.

A confirmation dialog appears.

- [Optional] To delete all or some of the storage files associated with the virtual machine, select the checkboxes next to the storage files you want to delete.

- Click Delete.

The virtual machine and any selected associated storage files are deleted.

5.5. Powering up and powering down virtual machines using the RHEL 8 web console

Using the RHEL 8 web console, you can run, shut down, and restart virtual machines. You can also send a non-maskable interrupt to a virtual machine that is unresponsive.

5.5.1. Powering up virtual machines in the RHEL 8 web console

If a VM is in the shut off state, you can start it using the RHEL 8 web console.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

- Click a row with the name of the virtual machine you want to start.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Run.

The virtual machine starts.

Additional resources

- For information on shutting down a virtual machine, see Section 5.5.2, “Powering down virtual machines in the RHEL 8 web console”.

- For information on restarting a virtual machine, see Section 5.5.3, “Restarting virtual machines using the RHEL 8 web console”.

- For information on sending a non-maskable interrupt to a virtual machine, see Section 5.5.4, “Sending non-maskable interrupts to VMs using the RHEL 8 web console”.

5.5.2. Powering down virtual machines in the RHEL 8 web console

If a virtual machine is in the running state, you can shut it down using the RHEL 8 web console.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

- Click a row with the name of the virtual machine you want to shut down.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Shut Down.

The virtual machine shuts down.

If the virtual machine does not shut down, click the arrow next to the Shut Down button and select Force Shut Down.

Additional resources

- For information on starting a virtual machine, see Section 5.5.1, “Powering up virtual machines in the RHEL 8 web console”.

- For information on restarting a virtual machine, see Section 5.5.3, “Restarting virtual machines using the RHEL 8 web console”.

- For information on sending a non-maskable interrupt to a virtual machine, see Section 5.5.4, “Sending non-maskable interrupts to VMs using the RHEL 8 web console”.

5.5.3. Restarting virtual machines using the RHEL 8 web console

If a virtual machine is in the running state, you can restart it using the RHEL 8 web console.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

- Click a row with the name of the virtual machine you want to restart.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Restart.

The virtual machine shuts down and restarts.

If the virtual machine does not restart, click the arrow next to the Restart button and select Force Restart.

Additional resources

- For information on starting a virtual machine, see Section 5.5.1, “Powering up virtual machines in the RHEL 8 web console”.

- For information on shutting down a virtual machine, see Section 5.5.2, “Powering down virtual machines in the RHEL 8 web console”.

- For information on sending a non-maskable interrupt to a virtual machine, see Section 5.5.4, “Sending non-maskable interrupts to VMs using the RHEL 8 web console”.

5.5.4. Sending non-maskable interrupts to VMs using the RHEL 8 web console

Sending a non-maskable interrupt (NMI) may cause an unresponsive running VM to respond or shut down. For example, you can send the Ctrl+Alt+Del NMI to a VM that is not responsive.

Prerequisites

Before using the RHEL 8 web console to manage VMs, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

- Click a row with the name of the virtual machine to which you want to send an NMI.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click the arrow next to the Shut Down button and select Send Non-Maskable Interrupt.

An NMI is sent to the virtual machine.

Additional resources

- For information on starting a virtual machine, see Section 5.5.1, “Powering up virtual machines in the RHEL 8 web console”.

- For information on restarting a virtual machine, see Section 5.5.3, “Restarting virtual machines using the RHEL 8 web console”.

- For information on shutting down a virtual machine, see Section 5.5.2, “Powering down virtual machines in the RHEL 8 web console”.

5.6. Viewing virtual machine information using the RHEL 8 web console

Using the RHEL 8 web console, you can view information about the virtual storage and VMs to which the web console is connected.

5.6.1. Viewing a virtualization overview in the RHEL 8 web console

The following describes how to view an overview of the available virtual storage and the VMs to which the web console session is connected.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

To view information about the available storage and the virtual machines to which the web console is attached:

- Click Virtual Machines in the web console’s side menu.

Information about the available storage and the virtual machines to which the web console session is connected appears.

The information includes the following:

- Storage Pools - The number of storage pools that can be accessed by the web console and their state.

- Networks - The number of networks that can be accessed by the web console and their state.

- Name - The name of the virtual machine.

- Connection - The type of libvirt connection, system or session.

- State - The state of the virtual machine.

Additional resources

- For information on viewing detailed information about the storage pools the web console session can access, see Section 5.6.2, “Viewing storage pool information using the RHEL 8 web console”.

- For information on viewing basic information about a selected virtual machine to which the web console session is connected, see Section 5.6.3, “Viewing basic virtual machine information in the RHEL 8 web console”.

- For information on viewing resource usage for a selected virtual machine to which the web console session is connected, see Section 5.6.4, “Viewing virtual machine resource usage in the RHEL 8 web console”.

- For information on viewing disk information about a selected virtual machine to which the web console session is connected, see Section 5.6.5, “Viewing virtual machine disk information in the RHEL 8 web console”.

- For information on viewing virtual network interface card information about a selected virtual machine to which the web console session is connected, see Section 5.6.6, “Viewing virtual NIC information in the RHEL 8 web console”.

5.6.2. Viewing storage pool information using the RHEL 8 web console

The following describes how to view detailed information about the storage pools that the web console session can access.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

To view storage pool information:

Click Storage Pools at the top of the Virtual Machines tab. The Storage Pools window appears, showing a list of configured storage pools.

The information includes the following:

- Name - The name of the storage pool.

- Size - The size of the storage pool.

- Connection - The connection used to access the storage pool.

- State - The state of the storage pool.

Click a row with the name of the storage pool whose information you want to see.

The row expands to reveal the Overview pane with the following information about the selected storage pool:

- Path - The path to the storage pool.

- Persistent - Whether or not the storage pool is persistent.

- Autostart - Whether or not the storage pool starts automatically.

- Type - The storage pool type.

To view a list of storage volumes created from the storage pool, click Storage Volumes.

The Storage Volumes pane appears, showing a list of configured storage volumes with their sizes and the amount of space used.
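If you prefer the command line, virsh can report similar storage pool details. The sketch below assumes a hypothetical helper named pool_details and a pool name that you supply; it is an illustration only and is not what the web console runs internally.

```shell
# Sketch for bash: show details about one storage pool and its volumes.
# pool_details is a hypothetical helper name.
pool_details() {
    local pool="${1:-}"
    [ -n "$pool" ] || { echo "usage: pool_details POOL" >&2; return 1; }

    # All pools, including state, autostart, persistence, and capacity.
    virsh pool-list --all --details

    # Summary of the selected pool.
    virsh pool-info "$pool"

    # Full XML definition, which includes the pool type and target path.
    virsh pool-dumpxml "$pool"

    # Volumes in the pool with their capacity and allocation.
    virsh vol-list --pool "$pool" --details
}
```

For example, pool_details default inspects a pool named default, assuming such a pool exists on the host.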

Additional resources

- For information on viewing information about all of the virtual machines to which the web console session is connected, see Section 5.6.1, “Viewing a virtualization overview in the RHEL 8 web console”.

- For information on viewing basic information about a selected virtual machine to which the web console session is connected, see Section 5.6.3, “Viewing basic virtual machine information in the RHEL 8 web console”.

- For information on viewing resource usage for a selected virtual machine to which the web console session is connected, see Section 5.6.4, “Viewing virtual machine resource usage in the RHEL 8 web console”.

- For information on viewing disk information about a selected virtual machine to which the web console session is connected, see Section 5.6.5, “Viewing virtual machine disk information in the RHEL 8 web console”.

- For information on viewing virtual network interface card information about a selected virtual machine to which the web console session is connected, see Section 5.6.6, “Viewing virtual NIC information in the RHEL 8 web console”.

5.6.3. Viewing basic virtual machine information in the RHEL 8 web console

The following describes how to view basic information about a selected virtual machine to which the web console session is connected.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

To view basic information about a selected virtual machine:

- Click a row with the name of the virtual machine whose information you want to see.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

If another tab is selected, click Overview.

The information includes the following:

- Memory - The amount of memory assigned to the virtual machine.

- Emulated Machine - The machine type emulated by the virtual machine.

- vCPUs - The number of virtual CPUs configured for the virtual machine.

Note: To see more detailed virtual CPU information and to configure the virtual CPUs of a virtual machine, see Section 5.7, “Managing virtual CPUs using the RHEL 8 web console”.

- Boot Order - The boot order configured for the virtual machine.

- CPU Type - The architecture of the virtual CPUs configured for the virtual machine.

- Autostart - Whether or not autostart is enabled for the virtual machine.
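Similar basic information is also available from virsh. The following sketch assumes a hypothetical helper named vm_basic_info; the grep pattern is a simple illustration that picks the machine type, boot device, and CPU lines out of the domain XML and may need adjusting for your configuration.

```shell
# Sketch for bash: print basic information about one virtual machine.
# vm_basic_info is a hypothetical helper name.
vm_basic_info() {
    local vm="${1:-}"
    [ -n "$vm" ] || { echo "usage: vm_basic_info VM" >&2; return 1; }

    # State, vCPU count, memory, persistence, and autostart setting.
    virsh dominfo "$vm"

    # Emulated machine type (<type ... machine=...>), boot devices (<boot>),
    # and CPU configuration (<cpu>) from the domain XML.
    # "|| true" keeps the function from failing when nothing matches.
    virsh dumpxml "$vm" | grep -E '<type |<boot |<cpu ' || true
}
```

For example, vm_basic_info demo-vm prints the details for a VM named demo-vm, which is a placeholder name.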

Additional resources

- For information on viewing information about all of the virtual machines to which the web console session is connected, see Section 5.6.1, “Viewing a virtualization overview in the RHEL 8 web console”.

- For information on viewing information about the storage pools to which the web console session is connected, see Section 5.6.2, “Viewing storage pool information using the RHEL 8 web console”.

- For information on viewing resource usage for a selected virtual machine to which the web console session is connected, see Section 5.6.4, “Viewing virtual machine resource usage in the RHEL 8 web console”.

- For information on viewing disk information about a selected virtual machine to which the web console session is connected, see Section 5.6.5, “Viewing virtual machine disk information in the RHEL 8 web console”.

- For information on viewing virtual network interface card information about a selected virtual machine to which the web console session is connected, see Section 5.6.6, “Viewing virtual NIC information in the RHEL 8 web console”.

5.6.4. Viewing virtual machine resource usage in the RHEL 8 web console

The following describes how to view resource usage information about a selected virtual machine to which the web console session is connected.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

To view information about the memory and virtual CPU usage of a selected virtual machine:

- Click a row with the name of the virtual machine whose information you want to see.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Usage.

The Usage pane appears with information about the memory and virtual CPU usage of the virtual machine.
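Memory and virtual CPU statistics can also be queried with virsh. This is a minimal sketch under the assumption that the VM is running; vm_usage is a hypothetical helper name, and the raw counters it prints are not formatted the way the Usage pane presents them.

```shell
# Sketch for bash: print memory and vCPU statistics for one virtual machine.
# vm_usage is a hypothetical helper name.
vm_usage() {
    local vm="${1:-}"
    [ -n "$vm" ] || { echo "usage: vm_usage VM" >&2; return 1; }

    # Per-vCPU state and time counters, plus memory balloon statistics.
    virsh domstats "$vm" --vcpu --balloon

    # Memory statistics reported for the domain.
    virsh dommemstat "$vm"
}
```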

Additional resources

- For information on viewing information about all of the virtual machines to which the web console session is connected, see Section 5.6.1, “Viewing a virtualization overview in the RHEL 8 web console”.

- For information on viewing information about the storage pools to which the web console session is connected, see Section 5.6.2, “Viewing storage pool information using the RHEL 8 web console”.

- For information on viewing basic information about a selected virtual machine to which the web console session is connected, see Section 5.6.3, “Viewing basic virtual machine information in the RHEL 8 web console”.

- For information on viewing disk information about a selected virtual machine to which the web console session is connected, see Section 5.6.5, “Viewing virtual machine disk information in the RHEL 8 web console”.

- For information on viewing virtual network interface card information about a selected virtual machine to which the web console session is connected, see Section 5.6.6, “Viewing virtual NIC information in the RHEL 8 web console”.

5.6.5. Viewing virtual machine disk information in the RHEL 8 web console

The following describes how to view disk information about a virtual machine to which the web console session is connected.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

To view disk information about a selected virtual machine:

- Click a row with the name of the virtual machine whose information you want to see.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Disks.

The Disks pane appears with information about the disks assigned to the virtual machine.

The information includes the following:

- Device - The device type of the disk.

- Target - The controller type of the disk.

- Used - The amount of the disk that is used.

- Capacity - The size of the disk.

- Bus - The bus type of the disk.

- Readonly - Whether or not the disk is read-only.

- Source - The disk device or file.
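The same kind of disk information can be listed with virsh. The sketch below uses a hypothetical helper named vm_disk_info; the optional second argument is a disk target name such as the ones shown in the Target column of the domblklist output.

```shell
# Sketch for bash: list the disks of a virtual machine and, optionally,
# the size details of one disk. vm_disk_info is a hypothetical helper name.
vm_disk_info() {
    local vm="${1:-}" target="${2:-}"
    [ -n "$vm" ] || { echo "usage: vm_disk_info VM [TARGET]" >&2; return 1; }

    # Type, device, target, and source of every disk attached to the VM.
    virsh domblklist "$vm" --details

    # Capacity, allocation, and physical size of one disk, if requested.
    if [ -n "$target" ]; then
        virsh domblkinfo "$vm" "$target" --human
    fi
}
```

For example, vm_disk_info demo-vm vda lists all disks of a VM named demo-vm and then prints size details for the vda target; both names are placeholders.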

Additional resources

- For information on viewing information about all of the virtual machines to which the web console session is connected, see Section 5.6.1, “Viewing a virtualization overview in the RHEL 8 web console”.

- For information on viewing information about the storage pools to which the web console session is connected, see Section 5.6.2, “Viewing storage pool information using the RHEL 8 web console”.

- For information on viewing basic information about a selected virtual machine to which the web console session is connected, see Section 5.6.3, “Viewing basic virtual machine information in the RHEL 8 web console”.

- For information on viewing resource usage for a selected virtual machine to which the web console session is connected, see Section 5.6.4, “Viewing virtual machine resource usage in the RHEL 8 web console”.

- For information on viewing virtual network interface card information about a selected virtual machine to which the web console session is connected, see Section 5.6.6, “Viewing virtual NIC information in the RHEL 8 web console”.

5.6.6. Viewing virtual NIC information in the RHEL 8 web console

The following describes how to view information about the virtual network interface cards (vNICs) on a selected virtual machine.

Prerequisites

To be able to use the RHEL 8 web console to manage virtual machines, you must install the web console virtual machine plug-in.

If the web console plug-in is not installed, see Section 5.2, “Setting up the RHEL 8 web console to manage virtual machines” for information about installing the web console virtual machine plug-in.

Procedure

To view information about the virtual network interface cards (vNICs) on a selected virtual machine:

- Click a row with the name of the virtual machine whose information you want to see.

The row expands to reveal the Overview pane with basic information about the selected virtual machine and controls for shutting down and deleting the virtual machine.

- Click Networks.

The Networks pane appears with information about the virtual NICs configured for the virtual machine.

The information includes the following:

- Type - The type of network interface for the virtual machine. Types include direct, network, bridge, ethernet, hostdev, mcast, user, and server.

- Model type - The model of the virtual NIC.

- MAC Address - The MAC address of the virtual NIC.

- Source - The source of the network interface. This is dependent on the network type.

- State - The state of the virtual NIC.
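Virtual NIC details can likewise be read with virsh. In this sketch, vm_nic_info is a hypothetical helper name, and the optional second argument is an interface name or MAC address taken from the domiflist output.

```shell
# Sketch for bash: list the virtual NICs of a virtual machine and,
# optionally, the link state of one of them.
# vm_nic_info is a hypothetical helper name.
vm_nic_info() {
    local vm="${1:-}" iface="${2:-}"
    [ -n "$vm" ] || { echo "usage: vm_nic_info VM [INTERFACE]" >&2; return 1; }

    # Interface name, type, source, model, and MAC address of each vNIC.
    virsh domiflist "$vm"

    # Link state (up or down) of one interface, if requested.
    if [ -n "$iface" ]; then
        virsh domif-getlink "$vm" "$iface"
    fi
}
```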

To edit the virtual network settings, click Edit. The Virtual Network Interface Settings dialog appears.

- Change the Network Type and Model.